nextGen (spaces after)

Installation + Performance

nextGen (spaces after) explores iterations of designed intelligence and speculative interactions, reimagining models of climate change and conflict as transformative agents. Through audio-visual installation and live performance, the work blends generative scripting, a stained glass structure, and sound to evoke tension between destruction and renewal. Interactive projections and evolving sounds bridge technology, nature, and spirituality, inviting contemplation on humanity's role in shaping emergent systems.

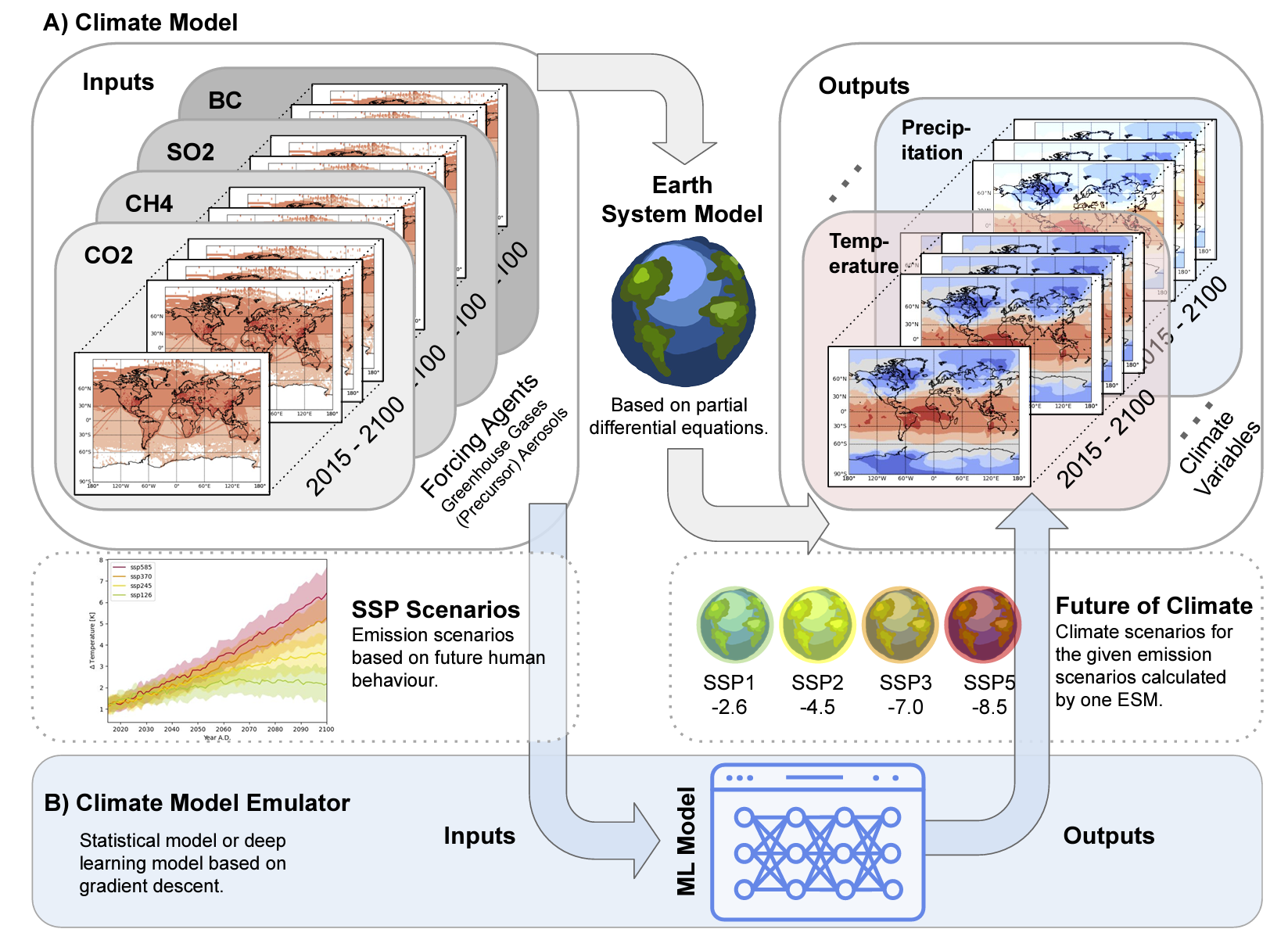

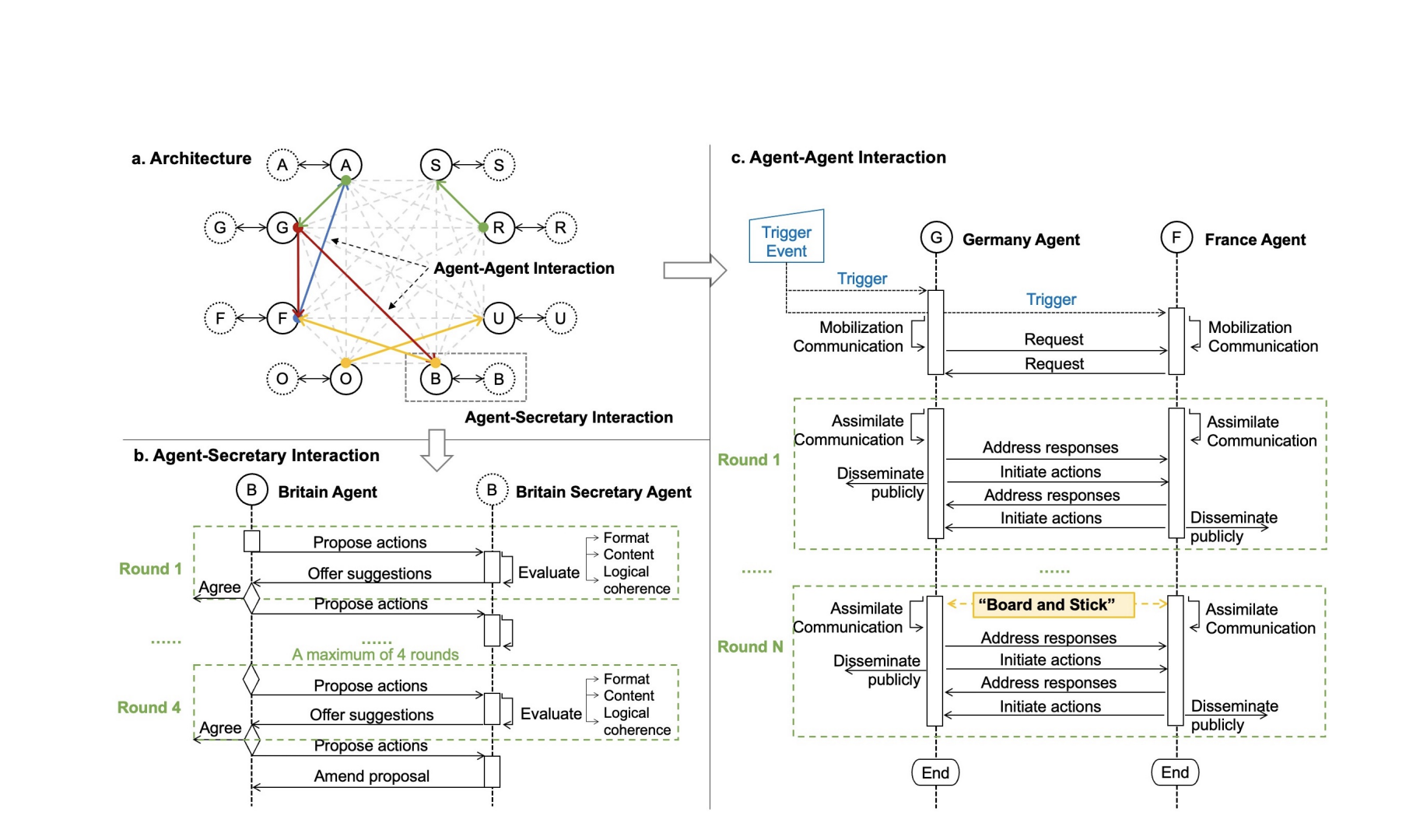

The project delves into the duality of generative AI and future modeling, exploring how technology can both catastrophize and transform human nature and the natural world. Sounds and visuals evoke a contemplative balance, speculating on autonomous spaces within this tension. Using scripting to bridge AI agents modeling conflict and natural phenomena, the work iterates through shifting imagery and sound that evolve into speculative forms, meditating on the interplay of structure and fluidity, function and transcendence, and the transition from potential disaster to generative renewal.

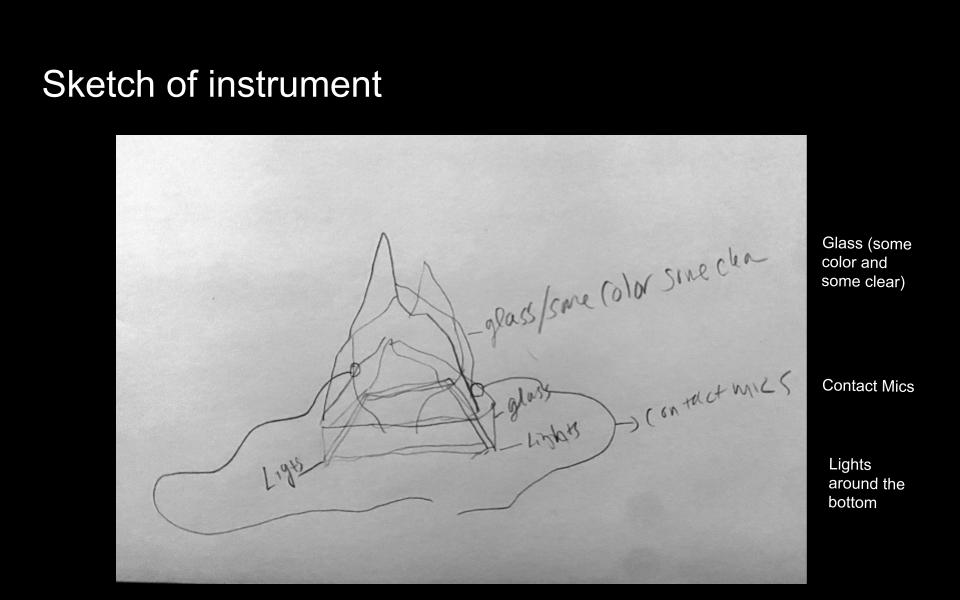

Central to the work is a large stained glass 'instrument,' illuminated from within and resonating with sound, both autonomously and during live performance. Its structure is informed by organic data imagery and algorithmic shape-shifting, and it serves as both the visual and the auditory centerpiece of the installation.

Sound is the connective tissue of the installation, generated through an interplay of light and glass. Optical resistors respond to light, producing resonant tones that are captured by contact mics, while data sonification in a Max patch amplifies and reinterprets these interactions. Field recordings are also mixed into these sounds. During live performance, voice becomes part of the soundscape, layered with a choral AI and with digital and analog effects, merging human and machine in sonic expression.

Video projections of these organisms animate on extruded screens made from fly screen mesh. The work invites the audience into a space where algorithmic designs, data, and emergent systems converge, reimagining the possibilities of consciousness and the role of art as a mediator between the organic, the technological, and the spiritual.

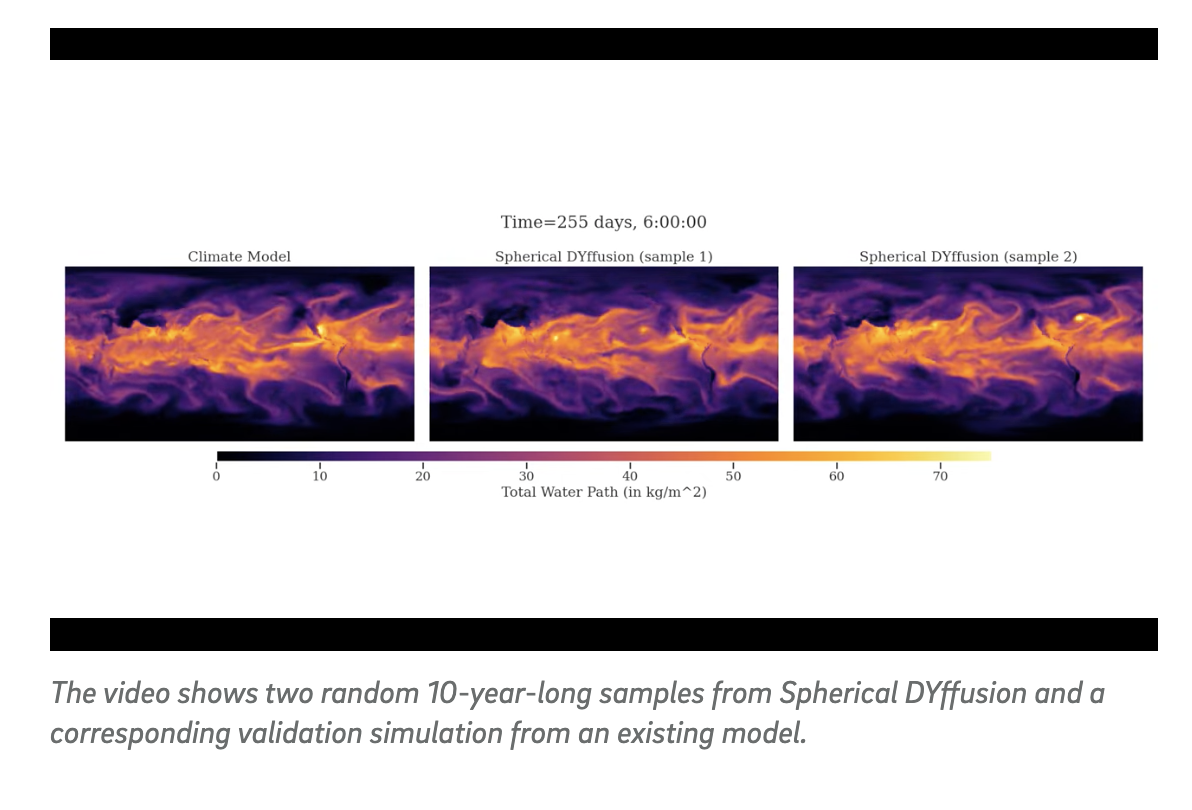

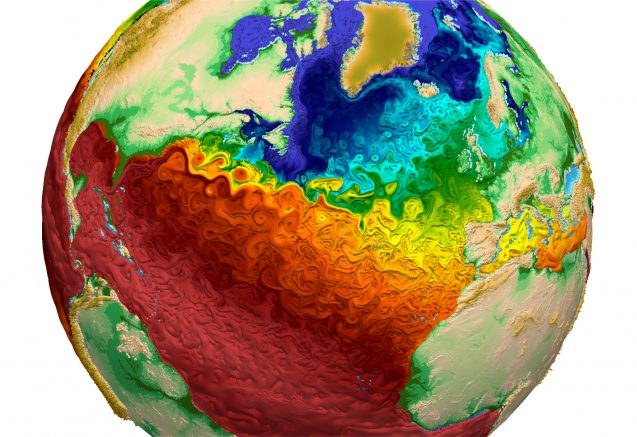

Research and iteration are now being conducted on the AI methods and data sets used to track and predict climate change and conflict. Extrapolating the methods and data from these models creates frameworks for the installation's shapes, visual imagery, and sound patterns. Resources include Climate Change AI, Google DeepMind, and UN AI networks.

Sound sample for nextGen (spaces after)

This sample contains vocals as they might be heard during performance, along with several channels of contact mics processed with various effects. It does not yet contain data sonification. The visual is a sketch of several data sources and algorithms simulating climate, ecology, and infrastructure in transitions between emergent spaces and chaotic entropy.

Past related work, included as examples of the process and form informing this project.

Performing Sila, using contact mics on glass and projection on glass.

From the installation Sila, a generative audio-visual piece informed by data. Thousands of images of ice from Greenland are transposed using computer vision, and water-soluble paper is dripped on over time by motors driven by the data, causing the piece to shift in form over time.